Code comes and goes, comments are forever.

Blog

Bones

March 6th, 2026 (permalink)

There's an episode of Lucifer where he watches all 12 seasons of Bones without sleeping. I noticed that Bones appeared on Netflix at some point and figured what the hey.

It took me about a month. And it probably wasn't good for my mental health. I mean, the series is okay, but watching the 12 seasons back to back wasn't.

And when I say it was okay, that's what it was. There were no terrible episodes. There were no amazing episodes. It was just... okay.

A common pattern (especially over season ending and the next starting) is that Something Dramatic is Happening that will Change Everything. Like someone wanting to quit. Someone getting sick. Or going to prison. Or a breakup, or something. Maybe a conspiracy, or an addict ruining their lives. Or a serial killer or two. You know the drill.

Two episodes later everything is fine, back to work everyone..

There's a lot of tech-magic which is completely excusable, but sometimes there's stuff that makes zero sense internally in the series: you introduce some minor side character for a joke, and then dozen episodes later that character is still around, in a biohazard suit, in the lab, without having any logical reason to be there.

There are some actual changes to the characters over the series, sure - some get married and get children, for example - but nothing that affects the "monster of the week" structure of the series. There are some arcs but they aren't all that serious, honestly. And even when there are, the episodes still follow the monster of the week structure, there's just several episodes of the same "case". I think there's only two episodes that don't follow the formula, one is a film noir fun episode and the other is the second to last episode that is told in a non-linear fashion.

I played about 250 rounds of Hexcells Infinite while watching the show.

Some other episodes of note.. there was a twitter episode, which was rather cringy. On several episodes around season 7 or so there was a blatant product placement about driving assistant in some specific car model.

In season 9 episode 5 the team is given an AI assistant, which everyone hates, it hallucinates the results and is sent back - not something you'd see in the real world, funnily enough.

In season 8 (aired in 2013) there's a joke about how ridiculous it would be if Trump, a known criminal, would consider running for president.

Oh, and the super-meta episode (season 7 episode 12):

There's a real-world phd who wrote books where she created the character based on herself. The TV show is based on those books. Where the character also writes books. And then there's an episode about a movie based on those books.

So there's an actor playing an actor playing a character based on books written by a character based on books written by a real world person who based the character on what she does in real life. Kind of.

Of guest stars, Stephen Fry is so good that all the other actors feel fake when he's around.

Comments Are Forever

February 12th, 2026 (permalink)

There was some old Garfield comic where the punchline was "muscles come and go, but fat is forever". It was supposed to be funny. I don't remember why.

Compilers don't see comments. That's kind of the point. However, this means that when the code attached to the comments changes, the compiler doesn't complain that there's a mismatch. Thus, one of these sayings I've found myself repeating goes:

This often happens in old codebases. There's a bug somewhere, or a refactor happens. Code changes, but either comments are ignored or are left alone because it's felt they have some historical value. At best they're irrelevant and could be removed and at worst they are misleading.

I came across some code that was rearranged - a code block was moved from one place to somewhere else - but the comment relevant to the code block was left behind. This was pretty complicated code so the comment was necessary. If the code needs changing in the future, the comment will likely confuse whoever is trying to decipher it in the future. At worst, they might think the comment says what the code should be doing, and will break it.

Maybe in the future someone will write an AI based linter that will flag misleading comments.

C.S.S.C.G.C. 2025

February 2nd, 2026 (permalink)

The C.S.S.C.G.C. (or comp.sys.sinclair crap games competition) for 2025 wrapped and my fishing game is one of the finalists. To quote the aforelinked page, Any one of them could take the crown for "least crap". Unfortunately, some candidates are too humble to accept the glory, fame and wealth that come with hosting next year's event.

Anyway. The winner wasted no time setting up the contest for 2026.

Since there's no theme requirement or anything, I started thinking about a Zamboni game where you'd clear the ice, one tile at a time.

Let's say the project name is "Glacial".

Every time you clear a level, you get another one, which is 4x as big as the previous one. Assuming the first level is 8x6, or 48 tiles, and clearing a tile takes one second, you could clear the first level in under a minute. Second level takes 3.2 minutes, third 12.8 minutes, 52.2 minutes, 204.8 minutes, 819.2 minutes..

Level 7 would be 512x384 or 196608 tiles. Given that we only get about 32k to play with, the tile status needs to be encoded in bits, and that way it will fit in 24576 bytes, so it should easily fit on the 48k speccy. Oh, and clearing that one second per tile is about 55 hours. We're in AAA scale, baby!

To add a loss state let's say we have a timeout, which should be generous, like 25% extra time. That means that for the first level, the time limit is 60 seconds. And for the last one, 245760 seconds (about 68 hours). The optimal strategy for playing the last level would be to do a horizontal zigzag pattern, and you only need to change direction every 512 seconds (about 8.5 minutes). But you can't just forget it due to the timeout. The zamboni stops at the edges; having it crash to the edge would be way too cruel. And/or too easy way to end the game.

Given the size of the playfield, if we're talking 8x8 tiles, the resolution of the last level is 4096x3072 which is a bit too much for the speccy, so there needs to be some kind of scrolling. Luckily I've arbitrary decided that the zamboni moves one tile per second, so there's plenty of time to update the screen. To make it clear that the screen actually scrolls, the uneven ice needs to be pseudorandom and even would have some repeating pattern. These only need to be generated when scrolling.

Assets: the Zamboni moving in 4 directions, some idea of the ice tile, maybe a logo at the top or bottom.

I'm pretty sure it's frustrating enough.

dspguide

January 6th, 2026 (permalink)

Well, I finished reading (most of) dspguide. The first half gave me some insights I had either forgotten, or never learned, including the convolution insight of my previous post.

Other insights were about how FFT works, what additional steps are needed for iFFT to work (mainly synthesizing the negtive frequencies), zero-padding tricks for FFT, and the significance of windowing. I'll probably revisit some old projects and fix some stuff. Maybe.

Other parts of the book were less interesting, going into data compression methods that have nothing to do with DSP, and other stuff which I'm already familiar with, and diving deeper into the Laplace and Z-transform math, which I skipped. I remember being really excited about z-transform in school, as you could turn particle systems into linear functions and jump forward and backward in time - something I never actually did.

Some jokes in the book landed, others were less succesful.

The book also is a product of its time, and it was nostalgic to read about internet speeds using a modem, or performance characteristics of 100Mhz computers. A lot of those things are really out of date or at worst misleading today.

Anyway, the "shortcuts and precalculations" I guessed at in previous blog post probably primarily consist of doing FFT, multiplying, and doing iFFT. Which is fine, except that when your IR consists of a million samples and you're doing real time processing, it still leaves some questions open..

Convolution

January 2nd, 2026 (permalink)

As far as I recall - and I may be totally wrong with this - back in school convolution was considered a "black box" being too complex math to get into. Granted, this was an engineering school, not a science one, so if a black box solves your problem, black box is what you'll use. In any case, the result was that I filed convolution under "stuff I don't need to care about".

Turns out, at least on discrete side (as in, dealing with samples, not continous signals), convolution is stupid simple, if computationally expensive (at least in a naive way).

Let's say we have an impulse response (IR); which might be a recording of a click in a tunnel with all its echoes etc.

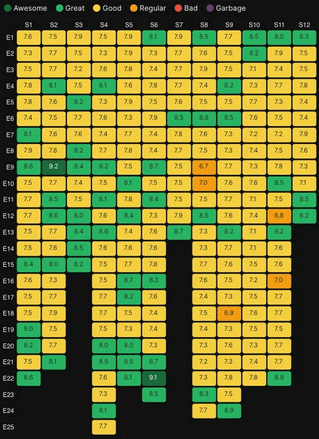

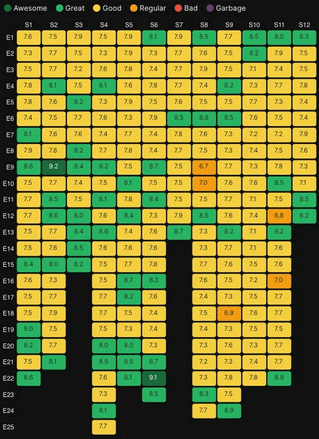

convolve_ir.ogg

impulse response

Now, we can take any other audio sample, which we'll call the signal.

convolve_signal.ogg

signal

And then, for evey sample in the signal, replace the single sample by the whole IR multiplied with the sample. Sum all of these. So if your signal is just a single sample of value 1, you would output the IR.

Let's say we have sample abcd and IR response of ABC:

aA aB aC

bA bB bC

cA cB cD

dA dB dC

The first output sample would be just a (the first sample in signal) multipled with A (the first sample in IR).

Second sample would be aB plus bA.

Third is aC + bB + cA.

Fourth is bC + cB + dA. And so on.

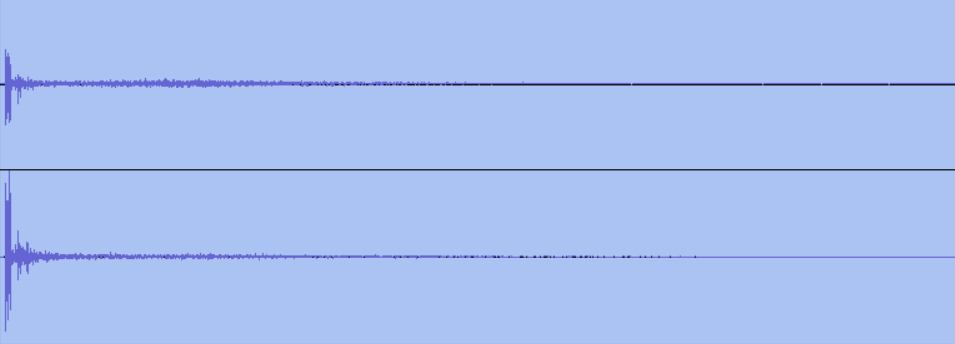

convolve_out.ogg

signal convolved with IR

And that's it. That's the whole thing.

Obviously if you're using an IR of thousands of samples and input of thousands of samples, this gets computationally rather expensive. I'm sure there are shortcuts and precalculations one can do, as there are realtime convolution filter VSTs out there.

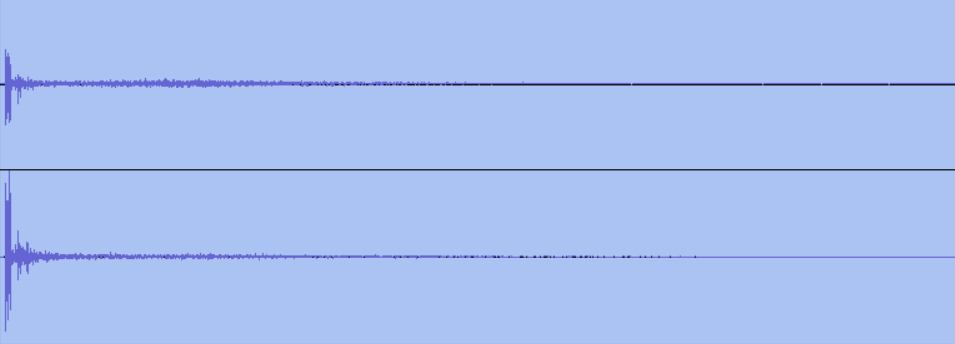

The fun thing is that you can convolve anything with anything; here's the signal convolved with itself:

convolve_out2.ogg

signal convolved with signal

MMXXVI

January 1st, 2026 (permalink)

Here's the new year demo:

And here's a reminder that my Ko-Fi shop exists. If you like my stuff, maybe consider checking it out?

I shouldn't even call these things resolutions, more like a "here's some stuff I should probably look at this year". Considering there's no downside to failing the tasks, I'm not likely to succeed, but hey, if you don't have any goals, you might as well just spend your time staring at a wall..

- I bought an electric guitar last year. I should try to do something with it daily or at least every second day. At one point I had a goal to just touch a synth keyboard daily, and while I'm not a keyboard player by definition, the year-long project clearly improved me. I'll try something similar with the guitar, maybe.

- I have a zx spectrum next game project going. I should get it done, even if it sucked.

- I started reading a DSP basics book. Which may surprise people considering all the audio projects I've done. Anyway, I should go through the book. So far it hasn't really taught me anything new, but it has given me more theoretical background to stuff I've done.

My day cycle is typically; wake up at 7 latest, hit the bed around 22. Due to new year I went to sleep at around 2am, and I've been a total zombie all day. It'll take me a few days to get back to normal routine.