tAAt 2021 new year demo breakdown writeup

Photo based fun again this year:

Like all my new year demos, this is one where I pick an idea and run with it, regardless of what the result is.

The demo ticks a few boxes from my earlier new year demos. First, it's a spinny thingy. Second, it's photo based. Third, it's... long =)

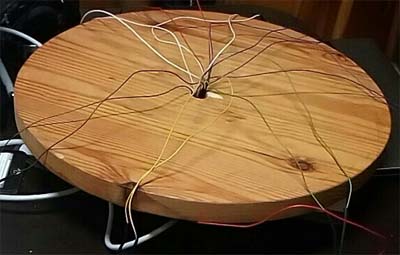

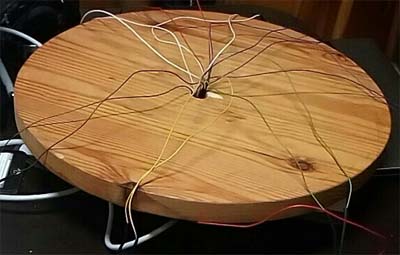

I got my hands on a wooden "cake spinner" and figured it could be used to try the "lighting from several photos" idea I used a few years back. One of the problems back then was camera movement; with a spinner the camera could stay put and the world could move.

So I drilled a hole through the middle so I could wire a bunch of LEDs through it. The idea being that the wires are loose enough so I could get 360 degrees (or preferably more) with the LEDs staying in place.

I bought a few hundred LEDs off eBay, and it was rather stressful waiting to see whether they would arrive in time for me to make the demo. When they had not arrived after a month I went to a local store and bought all their white LEDs (all 6 of them) at 1 eur a piece. The eBay order for 300 LEDs had been cheaper. Anyway, the LEDs took their sweet time, but arrived early enough. I did use the more expensive (but likely identical) LEDs for some testing before they did, though.

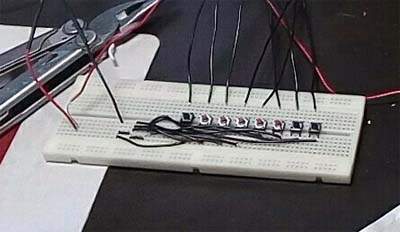

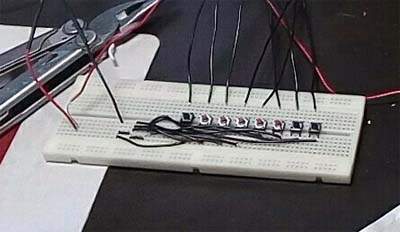

I wired a "controller board" on a prototyping board with a bunch of buttons, pulled the wires through the cake spinner, soldered LEDs on the wires. To see how bright I could make the LEDs I tried with smaller and smaller resistors, until eventually removing the resistor completely. This was probably a mistake in the long run.

The whole thing was powered with a USB power bank.

I cut a piece of cardboard to fit on top of the wooden spinner. In our rag box I found an old tAAt t-shirt that had been ruined while painting some furniture, cut the logo side off, hot-glued it to the cardboard, cut holes for the LEDs, and fixed the cardboard on the spinner with tape.

In order to get consistent steps between photos, I made a strip of paper that looped around the spinner, took it off and then folded it until I got 31 creases (so if you count to 32, you get back to the original position). I then took a post-it note, drew a line across it, stuck it on the table below the spinner and thus had a reference to align the creases with.

As a camera I used a phone on a tripod, held by a phone adapter. I tried to get the earbud button to take the photos so I wouldn't need to touch the phone, but that didn't work out, so I had to very carefully press the screen for each photo.

I used the Android Open Camera app to take photos, so I could lock the brightness and focus settings; any automatic adjustment between shots could really have blown the whole thing up.

I put a bunch of knick-knacks on top of the spinner, killed the lights and started taking photos. Align the spinner on a crease, press first button on the controller, snap a photo, press second button, snap a photo, repeat until all buttons have been pressed, align to the next crease, etc.

Early on while taking the photos one of the LEDs died - if you watched closely, you may have noticed that one of the LEDs never lights up. It's possible it's due to the overvoltage, or it's possible that one of my solder joints gave up when a cable tugged while rotating the spinner. Later on a second LED died, but luckily I had already taken enough photos for a full circle.

I built a proof of concept version that loaded up all the photos for a rotating animation, adding the photos together. It worked, but loading all the jpg textures took ages. I converted the textures to S3TC compressed textures, which take more disk space but load instantly. Hey, what's 200 megs of textures between friends?

As jpgs, the textures had taken 20 megs, which already felt like a lot, but I really didn't want to spend half of my development time waiting for textures to load. I guess I could have done S3TC compression on the fly, which would probably have been faster than loading RGBA textures.
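To put the size trade-off in numbers, here is a rough sketch of the storage arithmetic. S3TC stores every 4x4 pixel block in a fixed number of bytes (8 for DXT1, 16 for DXT3/DXT5), so the size depends only on the resolution, never on the image content. The photo resolution and the S3TC variant used in the demo are not stated, so any numbers fed into these hypothetical helpers are assumptions.

```cpp
#include <cassert>
#include <cstddef>

// Bytes needed for one S3TC image (single mip level).
// The image is split into 4x4 pixel blocks, rounding up at the edges;
// bytesPerBlock is 8 for DXT1 and 16 for DXT3/DXT5.
std::size_t s3tcBytes(std::size_t width, std::size_t height, std::size_t bytesPerBlock)
{
    assert(bytesPerBlock == 8 || bytesPerBlock == 16);
    const std::size_t blocksWide = (width + 3) / 4;
    const std::size_t blocksHigh = (height + 3) / 4;
    return blocksWide * blocksHigh * bytesPerBlock;
}

// Bytes needed for the same image as uncompressed 8-bit RGBA.
std::size_t rgbaBytes(std::size_t width, std::size_t height)
{
    return width * height * 4;
}
```

For a hypothetical 1024x1024 photo, `s3tcBytes(1024, 1024, 8)` is 512 KiB against 4 MiB for `rgbaBytes(1024, 1024)`. That fixed rate is why S3TC files are much larger than jpgs, yet can be handed to the GPU without a decode step.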

Code-wise everything is plain C/C++, basic OpenGL 1.2 using SDL2 as bootstrap. The photos are drawn as full screen textured quads with additive blending. I did consider going to shaders which would have let me do things like playing with the contrast dynamically, but didn't go there due to time constraints.
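What the additive blending computes can be sketched on the CPU: each photo shows the scene lit by one LED, and the final frame is the per-channel sum of the photos, each scaled by its LED's brightness and clamped at white. This is a minimal illustration and not the demo's code; the `Image` layout and the `addPhotos` name are made up for the example. In fixed-function OpenGL the same effect is typically had with `glEnable(GL_BLEND)` and `glBlendFunc(GL_ONE, GL_ONE)`, but the demo's exact blend setup is not stated.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One photo as a flat array of 8-bit channel values (hypothetical layout).
using Image = std::vector<std::uint8_t>;

// Sums weights[p] * photos[p] per channel and clamps the result to 255.
// With non-negative weights this matches what drawing the photos on top
// of each other with additive blending produces, up to rounding.
Image addPhotos(const std::vector<Image>& photos, const std::vector<float>& weights)
{
    assert(!photos.empty() && photos.size() == weights.size());
    const std::size_t n = photos[0].size();
    std::vector<float> sum(n, 0.0f);
    for (std::size_t p = 0; p < photos.size(); ++p) {
        assert(photos[p].size() == n);  // all photos must share one size
        for (std::size_t i = 0; i < n; ++i)
            sum[i] += weights[p] * photos[p][i];
    }
    Image out(n);
    for (std::size_t i = 0; i < n; ++i)
        out[i] = static_cast<std::uint8_t>(std::min(sum[i], 255.0f));
    return out;
}
```

Because light adds linearly, summing single-LED photos approximates a photo taken with several LEDs lit at once. The clamp is also where overly bright LEDs lose detail.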

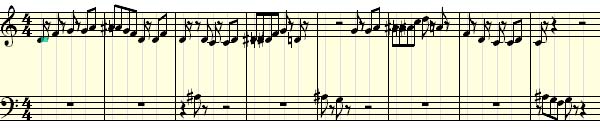

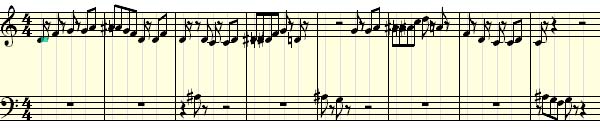

For music, I got this idea of trying something like Bolero, where a bunch of instruments play the same theme over and over again, building on top of the previous iteration. While walking back from day-care one morning, I whistled the theme and when I got back home I recorded my whistling so I wouldn't forget it - I did actually forget it promptly afterwards, so I'm happy I had the recording.

I hammered the notes into Reaper, and played them with a bunch of virtual instruments. I varied the note timings and octaves a bit between instruments, as some made more sense as hammered notes while others are longer notes. I also humanized the notes so it feels a bit more lively.

If I were someone who actually knew anything about music, I would probably have added more improvisation to the different instruments. For the drums, I took a few phrases from the NI Studio Drummer.

After tuning the volumes so everything felt like it might work together, I rendered all the instruments separately, along with a silent track.

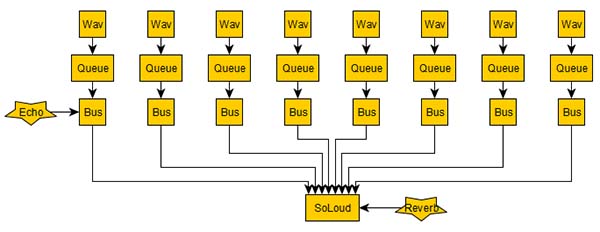

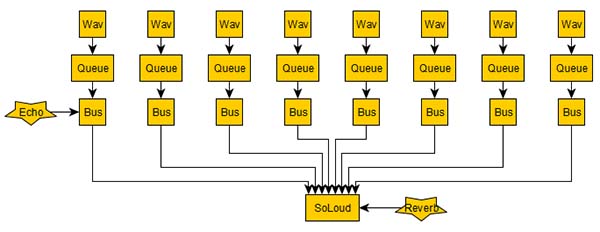

To play the audio out, I (naturally) used SoLoud. Since I wanted to get visualization information out of each of the instruments, I set up a bus for each. Each bus has a queue playing into it, and I queued either the desired instrument or the silence to all of the busses so they stay in sync. At one point of development the silent block was not of the correct length, which led to a very interesting cacophony - almost interesting enough to actually use, but I decided against it.
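Why the silent block has to match the theme's length exactly can be shown with a tiny model that involves no audio library at all. Each bus plays its queued blocks back to back, so a block starts at the sum of the lengths queued before it. The `slotStart` helper and the sample counts below are hypothetical, purely for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sample offset at which block number `slot` starts playing on one bus,
// given the lengths (in samples) of the blocks queued on that bus.
std::size_t slotStart(const std::vector<std::size_t>& blockLengths, std::size_t slot)
{
    assert(slot <= blockLengths.size());
    std::size_t start = 0;
    for (std::size_t i = 0; i < slot; ++i)
        start += blockLengths[i];
    return start;
}
```

If the theme and the silence are both 1000 samples long, a bus that queued theme, silence, theme and a bus that queued silence, silence, theme both start their third block at sample 2000. With a 990-sample silence the second bus starts at 1980 instead, and the error grows with every silent block, so the instruments drift apart.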

Every time the queues get empty enough, a bunch of new samples is queued. I also added a couple of real time DSP filters; there's a global reverb filter, and the bell bus has a delay filter. The samples themselves are relatively dry, so a global reverb works.

I generate a playlist of 240 different combinations where at least 2 instruments are active at the same time, and these are shuffled before the demo starts. The order is the same every time, though. I then overwrite the first few items in the playlist with specific instruments to get a bit of control for the intro section.
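One way to build such a playlist is to treat each combination as a bitmask, keep the masks with at least two bits set, and shuffle with a fixed seed so the order repeats from run to run. This is a sketch under assumptions: the instrument count, the seed and the `makePlaylist` name are not from the demo. Note that this plain rule gives 247 combinations for 8 instruments, not 240, so the demo evidently filters or counts somewhat differently.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <random>
#include <vector>

// Each entry is a bitmask: bit i set means instrument i plays the theme
// during that slot, bit i clear means its bus gets the silent block.
std::vector<std::uint32_t> makePlaylist(unsigned instrumentCount, std::uint32_t seed)
{
    assert(instrumentCount >= 2 && instrumentCount < 32);
    std::vector<std::uint32_t> playlist;
    for (std::uint32_t mask = 0; mask < (1u << instrumentCount); ++mask) {
        // Count the active instruments by clearing the lowest set bit repeatedly.
        unsigned active = 0;
        for (std::uint32_t m = mask; m != 0; m &= m - 1)
            ++active;
        if (active >= 2)
            playlist.push_back(mask);
    }
    // Fixed seed: the same build produces the same order on every run.
    std::mt19937 rng(seed);
    std::shuffle(playlist.begin(), playlist.end(), rng);
    return playlist;
}
```

Scripting the intro is then a matter of overwriting the first few entries after the shuffle, for example `playlist[0] = 0b11` (a made-up choice) to start with only the first two instruments.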

I thought about using the MIDI data to control the LED brightness, which might have worked better than what I went with, but on the other hand it might also have just made all the LEDs blink at exactly the same time, so it might have been worse.

The LED brightness is based on the "apparent" bus volume. There are several reasons why this doesn't work all that well (and why most audio visualizers look like crap), such as the fact that it reacts to the sound instead of predicting it - and since our brains are wired to know that light moves faster than sound, the result is a bit odd. I suppose I could have fixed that by creating a delay filter to delay the actual sound output by some milliseconds or something, but didn't really look into it.

I checked what the highest value of each bus is and scaled the LED brightness appropriately, so each LED should be about as bright. For a bit of color, there's a little bit of HSV2RGB conversion going on with relatively low saturation, making all of the LEDs different colored, cycling the hue.
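The brightness scaling and tinting can be sketched as follows: divide the current bus volume by that bus's measured peak to get a 0..1 brightness, then use it as the value in a standard HSV to RGB conversion with a low saturation. The function names, the hue source and the saturation value are assumptions made for the example; the demo's actual constants are not given.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Rgb { float r, g, b; };

// Standard HSV to RGB conversion; h in degrees, s and v in [0, 1].
Rgb hsvToRgb(float h, float s, float v)
{
    assert(s >= 0.0f && s <= 1.0f && v >= 0.0f && v <= 1.0f);
    h = std::fmod(h, 360.0f);
    if (h < 0.0f) h += 360.0f;
    const float c = v * s;        // chroma
    const float hp = h / 60.0f;   // which 60-degree sector of the hue circle
    const float x = c * (1.0f - std::fabs(std::fmod(hp, 2.0f) - 1.0f));
    const float m = v - c;        // offset that lifts all channels to value v
    float r = 0.0f, g = 0.0f, b = 0.0f;
    switch (static_cast<int>(hp)) {
        case 0: r = c; g = x; break;
        case 1: r = x; g = c; break;
        case 2: g = c; b = x; break;
        case 3: g = x; b = c; break;
        case 4: r = x; b = c; break;
        default: r = c; b = x; break;
    }
    return { r + m, g + m, b + m };
}

// Normalizes a bus volume by that bus's peak so every LED reaches about
// the same maximum brightness, then tints the result.
Rgb ledColor(float busVolume, float busPeak, float hueDegrees, float saturation)
{
    assert(busPeak > 0.0f);
    const float brightness = std::min(1.0f, std::max(0.0f, busVolume / busPeak));
    return hsvToRgb(hueDegrees, saturation, brightness);
}
```

Giving each LED its own hue offset and advancing all the hues a little every frame would produce the slow color cycling, and the resulting RGB triple could serve as the color the LED's photo is multiplied by before it is added to the frame.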

As a hook to make people listen to the theme enough times to let the earworm make a cosy home in their brains, the demo shows what are possibly the slowest greetings yet: one per 16 seconds. I doubt most viewers will watch the demo from beginning to end, but the hook is still there.

Based on this experience, what would I do differently? First, the camera was way too close to the target. I'd back it away so that the whole spinner along with all its items is in frame. Second, the LEDs ended up being too bright (even though I was afraid they might not be bright enough). Third, the number of frames - 31 for a cycle - takes a lot of disk space, while not quite being enough.

Maybe it would be possible to play N videos on top of each other, which might not take all that much more disk space while encoding the frames more efficiently. Making the rotation consistent between light passes would require the whole thing to be motorized, though, and would add a lot of accuracy issues making the whole thing much more complicated.

Or maybe the photos could have been used to generate a 3D mesh and textures, which might have taken less space and would have let a virtual camera look at things from any angle. Considering all the glassware I used and all the lens flares, I doubt that would have worked for this scene.

Code-wise things went well. There's always room for more polish (I didn't even bother with quit fadeouts). For audio, I could have used someone who knows about music =), but other than that, there could have been several versions of the theme for each instrument just for more variance, or I could have included more instruments and swapped between them in each "bus". But I doubt these would have made much of a difference.

And there we go. This year can't possibly be worse than the last one, now can it?

Comments, questions, etc. appreciated.